In the movie adaptation of Moneyball, Michael Lewis’ book about the Oakland Athletics, a nebbishy Jonah Hill describes the concept of moneyball like this to Brad Pitt’s beleaguered Billy Beane: “People who run ball clubs, they think in terms of buying players. Your goal shouldn’t be to buy players, your goal should be to buy wins. And in order to buy wins, you need to buy runs.” The goal for a small-market team like the A’s is to buy wins efficiently: to spend the least money for the most runs.

A similar approach is now ascendant in political campaigns. The goal for campaign professionals is to maximize not runs per dollar but votes per dollar. And discovering the strategies and tactics that accomplish this goal has been as disruptive in campaigns as it has been in baseball.

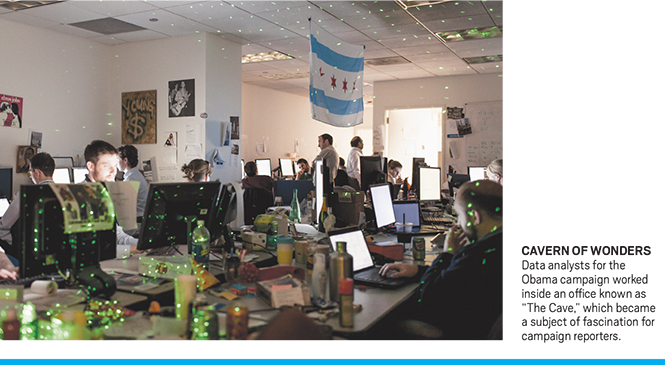

If the many media accounts of the 2012 presidential campaign are to be believed, Barack Obama’s victory can be credited to his campaign’s reliance on moneyball. A Time magazine headline from that November captured the hype: a “Secret World” of “Data Crunchers,” it said, masterminded Obama’s win. Soon after Election Day, Republicans trooped to a Harvard election postmortem to, as one Republican put it, go “to school” on the Obama campaign. The Republican National Committee released a report vowing to correct the party’s shortcomings in data, digital media, and field operations.

The innovations of moneyball in politics and the way the Obama campaign deployed data are real and important. But those innovations have not gone as far as the hype says they did. Can political campaigns use data to predict exactly how we’ll vote? Can they subtly manipulate our votes? Did data crunching really put Obama back in the White House?

The answer to all those questions is “not really.” A CNN story from late 2012 declared, “Campaigns know you better than you know yourself.” Hardly. We still know ourselves quite a bit better than campaigns do. And what was innovative in the Obama campaign was not necessarily decisive. Moneyball did not win the election. Even Obama’s data crunchers will freely tell you that.

But moneyball is how campaigns will increasingly be waged in the future. Basing campaign decisions on data is certainly a lot better than basing them on gut instinct or hunches, even if those hunches are occasionally right. While the techniques are being honed and tested, though, it’s worth learning more about what moneyball in campaigns can and can’t do to affect how we vote. It’s worth learning, in other words, just how powerful a campaign tool moneyball is or is not.

“BIG” DATA AND MICRO-TARGETING

Candidates have always tailored their messages to fit their audience. DeWitt Clinton, who ran against James Madison, made speeches supporting the War of 1812 at campaign stops in the South and West, and others denouncing the war at stops in the Northeast. So targeting is nothing new. What is new is how precisely campaigns can target. Rather than target by geography—region, county, precinct—Republican and Democratic campaigns now routinely target individual voters. Both parties have collected information about every American voter from state voter files and consumer databases.

While the term Big Data is often attached to these databases, in reality they are not that big. Dan Wagner, the chief analytics officer for the Obama campaign and now head of Civis Analytics, estimates that the campaign’s master data file was about 15 terabytes, or about 15 common desktop hard drives. The firm Catalist, a major data vendor for progressive and Democratic campaigns, currently has more than two petabytes of data, or 2,000 terabytes. For the sake of comparison, Google was processing 20 petabytes of data per day in 2007. The scale of campaign data has undoubtedly grown, but it is not remotely “big” by the standards of the tech world.

Even this relatively small Big Data, though, is useful. In 2012, one new and creative use of targeting was the Obama campaign’s “Optimizer,” which matched targeted voters to data about what television programs those voters tended to watch. The Obama campaign could then buy advertising time during those programs on smaller cable channels like TV Land, whose advertising rates are cheaper. This is the essence of moneyball in campaigns: pay less money to reach more of the right voters.

Just how precise and effective Big Data can be is nevertheless frequently overstated. As micro-targeting was gaining ground in 2004, The New York Times Magazine declared that “The Very, Very Personal Is Political,” and suggested that campaigns may make decisions based on the newspapers you read, the type of computer you own, and the style of clothes you shop for. In 2012, news reports suggested that the beers you drink or the television shows you watch would be similarly useful to campaigns.

In reality, consumer data is of limited utility. Within the data campaigns have, the best predictor of whether you will vote in an election is whether you voted in past elections. And the best predictor of how you’ll vote is not your choice of beer but your party registration. Consumer data adds little beyond basic demographics. Yale political scientist Eitan Hersh has shown that after accounting for race, income, and gender, factors like whether you own a dog or play golf do not explain much about your politics.

Both Democratic and Republican consultants who build targeting models agree. Dan Wagner has dismissed the importance of data about voters’ magazine subscriptions and cited the higher value of voting history and basic demographic information. Alex Lundry, the chief data scientist for the GOP micro-targeting firm Targetpoint and a senior strategist for the Romney campaign, told us, “Despite its sex appeal to the media, for the most part consumer data is only helpful on the margins.” Of course, if a campaign is won at the margins, then that consumer data could be helpful. But for the most part, Lundry says, its importance is “woefully overstated.”

Another misunderstanding concerns the accuracy of targeting. Although the information that does exist in state voter files about age, race, gender, and party registration is highly accurate, many states do not collect data about one or more of these characteristics, leaving campaigns to guess at something as crucial as race for millions of voters. And guessing is hard. Eitan Hersh matched predictions from Catalist to actual survey data, and found that the Catalist predictions of race were accurate 90 percent of the time for whites—but only 73 percent of the time for Hispanics, and 68 percent for blacks. It was even harder to predict religion: Catalist predictions correctly identified Jewish voters 25 percent of the time and Catholic voters 38 percent of the time. When we asked Wagner about the modeling that the Obama campaign did to predict the race of voters in 2012, he told us that he was “really worried all the time” about misclassifying the race of voters.

A final misunderstanding is whether, once campaigns have isolated important subsets of voters, those people will be persuaded by micro-targeted communications—that is, by messages crafted specifically for them. In The Victory Lab: The Secret Science of Winning Campaigns, Sasha Issenberg’s book about the moneyball movement in campaigns, he describes how the 2004 Bush campaign targeted “Hispanic women in New Mexico” with a message about education, “environmentally minded Pennsylvania moderates” with a message about the environment, and “swing voters” with a visceral message about September 11th and terrorism.

But broadly targeted messages may work better than micro-targeted messages. In 2010 and 2011, Hersh and University of Massachusetts political scientist Brian Schaffner asked people to evaluate a hypothetical candidate, “Williams,” after showing them a piece of mail that this candidate “sent” to voters. Different random subsets of people saw different pieces of mail. One mentioned that Williams would fight for a broad group, the middle class. Other subsets saw mail in which Williams vowed to fight for smaller slices of the electorate: Latinos, unions, or religious conservatives. If micro-targeted messages work, Latinos, union members, and religious conservatives should have reacted more favorably when Williams promised to fight for them. But Hersh and Schaffner found that these groups typically did not. The authors also found evidence of backlash when the mail was mis-targeted. For example, when non-Latinos saw the mail advertising Williams’ commitment to Latinos, they liked him less. Given the challenge of correctly predicting attributes like race and religion, such mis-targeting is very much a reality.

Of course, this was just one set of experiments with a hypothetical candidate. Are things different in the real world of campaigning? Not necessarily. In 2012, the Obama campaign famously experimented with fundraising emails to its supporters—trying out different messages, subject lines, formats, and more. Because it had information about these supporters from the voter file and elsewhere, it could see whether certain kinds of supporters responded more to certain kinds of emails. This generally wasn’t the case. “Usually the winning email was universal,” Obama’s director of digital analytics, Amelia Showalter, told us.

Similarly, the Obama campaign was experimenting with different scripts that volunteers would use when contacting persuadable voters. Some scripts were targeted at specific demographics and some simply emphasized the campaign’s broader economic message. Which was more effective? Obama’s national field director, Jeremy Bird, told us, “Almost every test that I saw, the broad economic message was winning.”

MAD SCIENTISTS IN THE WAR ROOM

For a campaign that wants to target voters precisely, more data is usually better. But campaigns also want to do something else, something Big Data alone cannot accomplish: figure out what really moves voters. This is where randomized controlled experiments—the “lab” of The Victory Lab—have become crucial. Hundreds of experiments have taught campaigns how best to motivate potential voters to cast a ballot, largely thanks to the work of political scientists and the Analyst Institute, a group that conducts experiments on behalf of left-leaning groups and candidates.

In 2012, the Obama campaign often relied on experimentation, perhaps most famously with its fundraising emails. Amelia Showalter describes a chance discovery—later confirmed in testing—that when the campaign asked people with no donation history to give only a small amount like $3 and sent them to a page where the smallest default amount was $10, most would give $10 rather than writing in $3. For people who had donated before, the team calibrated the amount they asked for based on each supporter’s donation history. They also discovered that asking donors for odd amounts like $59 or $137 was more effective than asking them to give in amounts rounded to the nearest $5 or $10.

Perhaps an even more important experiment, though one that has received much less attention, occurred in February 2012 and involved a particularly difficult question: could the Obama campaign actually persuade people to vote for him? However universal persuasion is as a campaign goal, there have been few rigorous experiments on persuasive messages. In general, randomized controlled experiments in campaigns have focused on whether people vote at all and how to help get them to the polls, not whom they vote for. That focus is effective and relatively cheap to evaluate: voter turnout is part of the public record. Persuasion is harder to achieve, and expensive to measure: voter choices are secret, and can be measured only by polling.

That February, the Obama campaign randomly assigned a sample of registered voters regardless of party registration to a control group or a treatment group. It then invested a massive amount of volunteer effort—approximately 300,000 phone calls—to reach the treatment group with a message designed to persuade them to vote for Obama. Two days later, the campaign was able to poll about 20,000 of these voters and ask how they planned to vote. The phone calls worked: People who were called were about four points more likely to support Obama. The effect was so large that Obama’s director of experiments, Notre Dame political scientist David Nickerson, calls it “stunning.”
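The logic of such an experiment can be sketched in a few lines of Python. This is only an illustration, not the campaign's actual analysis: the responses are simulated, and the baseline support rate of 48 percent is an assumption made up for the example. Only the roughly 20,000 polled voters and the four-point effect come from the account above. Because voters are assigned to the two groups at random, the difference in support rates between the groups estimates the effect of the phone call.

```python
import random

def support_rate(responses):
    """Share of polled voters who say they support the candidate."""
    return sum(responses) / len(responses)

def estimate_effect(treated, control):
    """Treatment-minus-control difference in support, in percentage points."""
    return 100 * (support_rate(treated) - support_rate(control))

def simulate_poll(n_polled=20_000, base_support=0.48, true_lift=0.04, seed=0):
    """Simulate poll responses. base_support is a hypothetical value."""
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n_polled):
        # Random assignment: each voter has an equal chance of either group.
        if rng.random() < 0.5:
            treated.append(rng.random() < base_support + true_lift)
        else:
            control.append(rng.random() < base_support)
    return treated, control

treated, control = simulate_poll()
effect = estimate_effect(treated, control)
print(f"Estimated effect of the call: {effect:.1f} points")
```

The estimate will not land on exactly four points, because polling a sample adds noise. That noise is one reason an effect of this size required such a large experiment to detect.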

Of course, the Obama campaign did not assume that a single phone call in February had locked up these voters. The main contribution of the experiment—what Nickerson now calls “one of the big innovations”—was the model the Obama analytics team then built. This model was not like the models that both Democratic and Republican campaigns typically construct, which generate scores representing how likely you are to vote for the Republican or the Democrat. The February experiment generated a statistical model of “persuadability”—how likely you were to change your mind when contacted by the campaign.

But such innovations have led to the mistaken notion among some commentators that the Obama campaign “tested everything.” That is far from the truth. It is true that the Obama campaign was surveying about 10,000 voters per night in the heat of the campaign, and using this massive tracking poll to identify the impact of its advertising and other electioneering, in order to determine where to keep spending money and where to stop. But looking at shifts in tracking polls is not the same thing as a true experimental test, as the Obama campaign fully understood. Wagner told us, “You can’t test every single thing as it happens.”

What were some things that went largely untested? One was the basic message of the campaign—that Obama had made important strides despite the terrible economy he inherited, that he was more in touch with the middle class than Mitt Romney, and so on. This message was crafted using the traditional tools of campaigns—polling and focus groups and even what one dedicated data cruncher called, using a phrase that sounds distinctly unlike moneyball, “common sense.” Testing also played little role in fundraising that didn’t involve emails: The money was flowing in, so no one wanted to risk spending some of that money on experiments that might or might not add to the campaign’s coffers.

Perhaps most notable is that the campaign’s biggest expense, television advertising, was subject to very little testing. This is no strike against the Obama campaign. Testing TV advertising in the field is very difficult: campaigns are understandably reluctant to treat certain television markets as a control group, and thereby allow the opponent to run ads unopposed in those markets. There are only two published field experiments involving television advertising in the political science literature. Nevertheless, Obama strategists expect more experiments with television advertising in the future. “It’s two-thirds of a campaign,” Nickerson says. “You’re not testing it?” Given current and expected innovations by satellite and cable-television providers, those tests will likely be able to target not just television markets but individual television sets.

Experiments involving TV advertising have a long way to go. Even campaigns that target ads to individual television sets may not be able to know who, if anyone, is sitting in front of the television when the ads air—or whether the person who later answers the phone for a poll to measure the ads’ effects is the same person. Such experiments may not save campaigns more money than the experiments themselves cost.

TARGETED SHARING

Targeting voters is nothing new, however sophisticated data analysis has made it. Something more innovative is what the Obama campaign called “targeted sharing”: using social networks to spread its message.

Heading into the general election, the Obama campaign lacked contact information for a substantial number of the voters it wanted to target, particularly younger voters. So it used Facebook to find them by searching the networks of registered supporters who had signed into the campaign using the social media platform. The campaign then asked those supporters to contact the targeted voters who were their friends. After the campaign, reporting on this sharing program described how “more than 600,000 supporters followed through with more than five million contacts,” to quote from a Time account.

Those sound like big numbers. But they are what one senior figure in the progressive analytics community calls “vanity metrics”: numbers that seem impressive but don’t reveal much about effectiveness. The crucial question is not how many contacts were made, but how many contacts actually produced the desired outcome: a vote for Obama. We don’t know the answer to that question, because there was no experimental test of the targeted sharing program. The program was developed late in the campaign and there was not much time, and perhaps not much incentive, to figure out whether it would work.

And so its impact remains uncertain, Amelia Showalter told us. “Just because we had millions of shares doesn’t mean that we turned out millions of votes,” she says. “I have no idea if the targeted sharing program got us an additional 100 votes or 100,000 votes.” If the results of a 2010 Facebook study are any indication, the effect was small: extrapolating from what that study found, and assuming that there were five million contacts via targeted sharing, the campaign’s program would have mobilized only about 20,000 voters, a tiny fraction of Obama’s five-million-vote margin.
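The arithmetic behind that extrapolation is simple enough to check. The sketch below uses only the figures quoted above. The per-contact rate it prints is the rate implied by those figures, not a number taken directly from the Facebook study.

```python
contacts = 5_000_000          # contacts made through targeted sharing
mobilized = 20_000            # extrapolated number of voters mobilized
popular_vote_margin = 5_000_000  # Obama's approximate margin in votes

# Rate implied by the two figures above: votes gained per contact.
votes_per_contact = mobilized / contacts

# How much of the winning margin those votes would represent.
share_of_margin = mobilized / popular_vote_margin

print(f"Implied votes per contact: {votes_per_contact:.1%}")
print(f"Share of the margin: {share_of_margin:.1%}")
```

Both figures come to 0.4 percent: about one additional vote for every 250 contacts, and less than half a percent of the margin.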

Of course, the targeted sharing program could in fact have been more effective. The point is that we do not know. This gets to a broader challenge with testing the effects of social media. Existing platforms make it hard to do randomized controlled experiments. If you tweet, for example, Showalter says, “it’s just going to go to everyone”—not to a targeted group. She calls social media campaigning “an art, because it can’t quite be a science yet.”

Social media platforms may evolve in ways that allow testing. And certainly campaigns are going to push social media further. Jeremy Bird told us that it made little sense to have separate groups within the campaign for “field organization” and “digital media.” He views social media as a means of field organizing, just like the knock on your door from a campaign volunteer. Social media could indeed prove to be a cheaper way to mobilize people than a traditional get-out-the-vote campaign. We just don’t know yet.

THE FUTURE OF MONEYBALL

No one disputes that the culture of moneyball is ascendant in campaigns. But there is still a long way to go, and important obstacles in the way. Wagner put the constraints succinctly: “talent, time, and money.”

Talent is important because analytics and experimentation—constructing complicated models and experiments—require expertise. Showalter has a master’s from Harvard’s Kennedy School of Government and Nickerson a doctorate in political science from Yale. Developing such talent takes work and expensive education.

Without sufficient talent, mistakes will be made. Showalter describes how, early on, even the Obama campaign’s fundraising experiments weren’t being done as well as they could have been: The campaign was doing tests on only a dedicated portion of its list of supporters, rather than creating new treatment and control groups for each test. It was as if the campaign was testing different drugs but always on the same people. Showalter convinced the campaign to “re-randomize” supporters before each test.
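What “re-randomizing” means can be sketched as follows. This is a minimal illustration under assumed names, not the campaign's code: the supporter list, the test names, and the `assign_groups` helper are all hypothetical. The point is that every test draws a fresh random split of the whole list, so no fixed subset of supporters absorbs every treatment.

```python
import random

def assign_groups(supporters, rng, treatment_share=0.5):
    """Return a fresh random (treatment, control) split of the full list."""
    shuffled = supporters[:]      # copy, so the master list is untouched
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * treatment_share)
    return shuffled[:cut], shuffled[cut:]

rng = random.Random(2012)         # seeded only to make the sketch repeatable
supporters = [f"supporter_{i}" for i in range(10)]

# Each test gets its own split, rather than reusing one dedicated test group.
for test_name in ["subject_line_test", "ask_amount_test"]:
    treatment, control = assign_groups(supporters, rng)
    print(test_name, "treatment group:", sorted(treatment))
```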

Jennifer Green, the executive director of the Analyst Institute, is not sure that the people who typically run campaigns can evaluate talent in analytics. “A lot of the decision makers aren’t educated about statistics,” she says, “so if they’re not getting good data, they don’t know the right questions to ask.”

There would be a considerable irony if the fields of analytics and testing were increasingly populated with hacks and charlatans. The whole purpose of moneyball, after all, is to use hard evidence to push back against sheer salesmanship and the instinctive spending of large sums. But as with polling and any other aspect of campaigning, there may be little that stops people from hanging out a shingle and declaring themselves experts.

Then there is the question of time. Campaigns are in a sprint to Election Day and have only one goal in mind: winning. Analytics and testing have to be put in service to this goal. The big February 2012 persuasion experiment clearly fit this bill, but other work may not. Experiments have to help campaigns make real-time decisions in order to get others in the campaign to buy in.

Finally, the need for money cannot be emphasized enough. In baseball, moneyball was a way for a relatively cash-poor team like Oakland to overachieve. In political campaigns, the moneyball approach is so far a luxury of well-funded campaigns, not the secret weapon of impoverished ones. The Obama campaign’s embrace of analytics and testing hardly made it analogous to the scrappy Oakland Athletics. Having raised nearly $1.1 billion, the campaign was more akin to the New York Yankees. People within the Obama campaign believe that its analytic and testing budget was well spent. Wagner says that the campaign’s analytics group was “generating our own value.”

But for other campaigns—particularly congressional, state, or local races—the calculation may be different. “People who run for mayor of New York City—they can do all this,” Nickerson told us. “I don’t know if Dubuque is really up to it.” The reason is that analytics cost money, just like anything else in a campaign, and the return on investment may not be worth it. If a campaign spends $100,000 to hire a micro-targeting firm, and the firm’s modeling saves the campaign $50,000 by preventing the campaign from wasting resources on the wrong voters, then the campaign has still paid $50,000 more than it saved.
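The return-on-investment calculation in that example can be written out directly. The sketch below uses the hypothetical figures from the paragraph above, and the `net_return` helper is a name invented for illustration.

```python
def net_return(cost, savings):
    """Money saved by the analytics minus what the analytics cost."""
    return savings - cost

firm_fee = 100_000      # what the campaign pays the micro-targeting firm
waste_avoided = 50_000  # what the firm's modeling saves the campaign

net = net_return(firm_fee, waste_avoided)
print(f"Net return: ${net:,}")  # negative means the campaign lost money
```

The investment pays off only when the savings exceed the fee, which is easier for a campaign large enough to have a lot of potential waste to cut.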

Even gathering the necessary data to do analytics may be cost-prohibitive. The Obama campaign probably spent between $8,000 and $10,000 to do each nightly 10,000-person poll, which in turn provided the data it needed to refine and update its targeting models. “Electoral and advocacy campaigns have an uphill climb for good data,” Aaron Strauss, who formerly worked in analytics for the Democratic Congressional Campaign Committee, told us. This is very different from, say, a baseball team that has a wealth of statistics at its fingertips.

And experimental findings can have a short shelf life. Obama’s digital analytics team discovered that the effects of some tweaks to their emails seemed to wear off as the campaign went on. Elan Kriegel, Obama’s battleground-states analytics director, says that the implications of the campaign’s persuasion modeling changed over time: people who appeared persuadable in March were different from those who appeared persuadable in September and October. This is to say nothing of the challenge of translating findings across campaigns in different election years or in different states or districts. Showalter describes how Obama emails seemed to work better when they were shorter, but her counterpart on Elizabeth Warren’s Senate campaign discovered that they raised more money when supporters got long emails that Warren wrote herself. What worked for Warren, Showalter says, were “things that broke all the rules.”

There are a couple of ways in which poorer campaigns can benefit from wealthier campaigns that innovate in analytics: The rich campaign’s data and findings can be passed down and applied in lower-level races. In part, this can happen because the political moneyball enterprise is, as Wagner called it, a “party-centered project,” at least on the Democratic side. Kriegel told us that information that campaigns learn “doesn’t die anymore,” and noted that the Obama campaign’s data included information about voter contacts from the 1996 campaign. “If you’re a congressional candidate and you lost in 2010 and someone else is running in 2012 or 2014,” he says, that person “might win because of the data you collected in 2010.”

Findings from experimentation and testing can also trickle down to poorer campaigns, particularly when those findings are rooted in basic psychology. A number of experiments have shown that potential voters are more likely to show up on Election Day when a volunteer asks them to plan when they will vote, where they need to go to vote, how they will get there, and so on. The Analyst Institute’s “best practices” are, we were told, on campaign bulletin boards all over the country.

EVEN THE GURUS DON’T KNOW

After the election, reporting and commentary were full of what Jennifer Green calls “guru-ism”: hagiographic features like Rolling Stone’s profiles of “The Obama Campaign’s Real Heroes,” a.k.a. the “10 key operatives who got the president reelected.” But many gurus reject such characterizations. “What’s next,” Green told us, “is reining in the guru-ism.” Later she said, “I think the media articles about how this stuff is the ‘magic everything’ are creating a certain unrealistic optimism about what these strategies can do anytime in the near future.”

Realism means paying as much attention to a winning campaign’s failures as its successes. For example, in contrast to Obama’s 2012 persuasion experiment, a similar experiment in Wisconsin in 2008 appeared to have the opposite effect: voters contacted by the campaign became less likely to support the candidate, according to research by Georgetown’s Michael Bailey and Dan Hopkins and Harvard’s Todd Rogers, who formerly headed the Analyst Institute.

Being realistic also means acknowledging how hard it is to estimate the total impact of the campaign. There is a prevailing narrative that Obama won the 2008 and 2012 elections because of his campaign—its data, its models, its field operation, and so on. But Obama campaign staff members are far more circumspect. We put the question to them this way: in 2012, Obama had about a four-point margin of victory in the national popular vote. How much of that margin is attributable to the work that his campaign did? Dan Wagner said, “It’s hard to say.” David Nickerson said, “I don’t know.” Jeremy Bird said, “I don’t know the number at all.” Elan Kriegel said “two points”—and later qualified that with “at most.”

Evaluating the impact of the Obama campaign also depends on the appropriate comparison. Going back to moneyball’s baseball origins, Dan Porter, Obama’s director of statistical modeling, invokes the concept of “value over replacement player.” The effectiveness of a major-league pitcher, he says, would never be evaluated against something absurd like sending Porter himself to the mound (the equivalent of a hypothetical in which Obama didn’t run a campaign at all). It would be evaluated against a more plausible alternative, like putting an average major-league pitcher in place of the pitcher in question. So we should evaluate Obama’s moneyball-style campaign against a campaign that still spent plenty of money, aired plenty of ads, and contacted plenty of voters—but did so with less analytical sophistication. Ultimately, then, a campaign that was merely adequate might well have won Obama re-election.

There is another parallel here to the Oakland Athletics version of moneyball. Billy Beane famously said, “My shit doesn’t work in the playoffs.” In the playoffs, chance factors can overwhelm whatever advantages a team had accrued through moneyball. The same is even more true of elections. In baseball, there’s at least a best-of-seven series. An election is a one-time, sudden-death contest. The election-year economy and many other things were out of Obama’s and Romney’s control. Moneyball can make a campaign more efficient, but cannot always help the campaign win.

Fortunately for candidates, a real guru will tell them exactly that.

This post originally appeared in the January/February 2014 issue of Pacific Standard as “Inconclusive Results.” For more, consider subscribing to our bimonthly print magazine.